White House isn't giving up on AI regulations ban

WASHINGTON — The White House is pushing for a moratorium on state artificial intelligence laws either in the annual defense policy bill or through an executive order directing the Justice Department to challenge state laws as unconstitutional or preempted by federal regulations.

But support for including a moratorium in the fiscal 2026 NDAA appeared scarce Thursday, especially without a national AI standard in place, and experts questioned the constitutionality of parts of a leaked draft executive order.

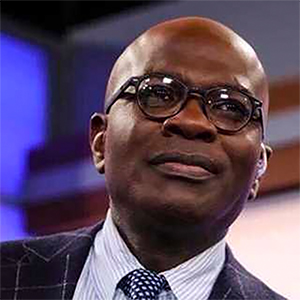

Senate Commerce Chair Ted Cruz, R-Texas, acknowledged pressure from the White House for Congress to move on a moratorium, after Cruz’s own moratorium provision was pulled out of the GOP’s budget reconciliation bill on a 99-1 vote this summer.

“The president is pressing forward,” Cruz told reporters. “We’ll see what happens.”

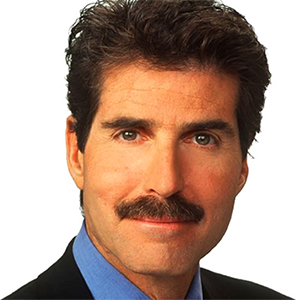

Separately, the outlook for inserting a moratorium in a final NDAA text was uncertain. Earlier this week, House Majority Leader Steve Scalise, R-La., told Punchbowl News that Republican leadership was looking for ways to advance a national moratorium, with the NDAA as a possible target.

Senate Armed Services Chair Roger Wicker, R-Miss., said Thursday, however, that he hasn’t “been focusing on” the issue.

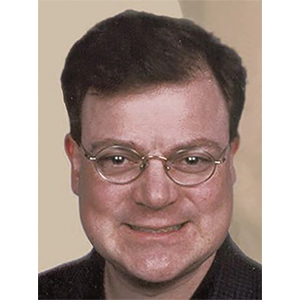

Rep. Rob Wittman, R-Va., who sits on the House Armed Services panel, also cast doubt on a moratorium in the bill. He told reporters, “I think that’s going to be out” of the NDAA.

He acknowledged a desire from the AI sector to avoid a “patchwork quilt” of state regulations, including some states that are “really impeding” the technology.

“But at the same time, you know, you don’t want to replace those things, if you don’t have a really thoughtful framework to put in that [creates] guardrails, right?” he said.

Sen. Mike Rounds, R-S.D., who sits on the Armed Services Committee, took a similar approach.

“The states do a very good job of looking out for their local concerns. And in many cases, or in some cases anyway, we should be able to take some of the ideas that they come up with and perhaps incorporate them into a national policy,” he said.

On the idea of an executive order to control state AI laws, Rounds said he was aware the White House was “exploring” the idea, but questioned what it would look like.

“I’m not sure what they can do, other than strongly recommend to Congress that we actually get to work and actually do some national policies on some of those same issues that are of concern to the states,” he said.

Democratic senators came out against any move to include a moratorium in the NDAA. They emphasized the employment and climate risks of large-scale AI deployment.

Massachusetts Democrats Sens. Edward J. Markey and Elizabeth Warren sent a “Dear Colleague” letter to Senate Democrats on Thursday urging them to oppose a moratorium provision in the NDAA. Warren serves on the Armed Services Committee.

“If included, this provision would prevent states from responding to the urgent risks posed by rapidly deployed AI systems, putting our children, our workers, our grid, and our planet at risk,” the letter said. It added, “President Donald Trump has now endorsed the policy proposal, siding with Big Tech and against states’ rights.”

Sen. Brian Schatz, D-Hawaii, echoed concerns that AI could cause “mass unemployment,” especially in entry-level positions, and emphasized the need for a national standard.

“Even if you’re excited about AI, even the smartest, most pro-AI people think that there is a regulatory framework that has to be established,” he said. “And so it would be one thing if we were to establish a federal standard, and that would preempt the states. But this is just saying that ‘we don’t know what the hell we’re going to do, and none of you all can do anything until we figure it out.’”

White House strategy

A leaked draft order was published by a news outlet on Wednesday, but a White House spokesperson said in a statement that “until officially announced by the WH, discussion about potential executive orders is speculation.”

The draft would not ban state laws on AI but would instead establish a task force within the Justice Department to challenge state laws, “including on grounds that such laws unconstitutionally regulate interstate commerce, are preempted by existing Federal regulations, or are otherwise unlawful.”

The executive order would also require the federal government to develop a list of specific AI laws that make their states ineligible for funding or that the task force should challenge, including those that “require AI models to alter their truthful outputs” or compel certain disclosures from AI developers or deployers.

Earlier this year, California passed the Transparency in Frontier Artificial Intelligence Act. When it takes effect on Jan. 1, the law will require large frontier developers to publish AI frameworks explaining how they incorporate standards and best practices, and to file a summary of a catastrophic risk assessment. It will also establish a reporting mechanism for AI safety incidents. Another California law will require AI developers to publish documentation of the data used to train their models.

The “truthful outputs” section of the draft executive order references a Colorado law, taking effect Feb. 1, that will require developers and deployers of “high-risk” AI systems (those making consequential decisions) to exercise “reasonable care” to protect users from risks of discrimination, which the law defines based on impact rather than intent.

The draft executive order would not establish a national standard on AI.

However, it would direct the Federal Communications Commission, in coordination with David O. Sacks, the president’s special adviser for AI and crypto, to start proceedings to decide whether to adopt a reporting and disclosure standard for AI. It would also direct the Federal Trade Commission, also in coordination with Sacks, to issue a policy statement to explain when the FTC’s prohibition on deceptive acts or practices affecting commerce preempts state AI laws that “require alterations to the truthful outputs of AI models.”

Finally, it would direct Sacks and the Office of Legislative Affairs to prepare a legislative recommendation establishing a national framework for AI.

The order would also direct the Commerce Department to restrict states’ access to nondeployment funding, for community access and other uses, under the $42.5 billion rural internet Broadband Equity, Access and Deployment program, known as BEAD, based on their AI laws.

The White House has long supported pushing back against state AI laws it deems “burdensome” to the industry. The AI Action Plan released in July directed federal agencies with “AI-related discretionary funding” to “limit funding if the state’s AI regulatory regimes may hinder the effectiveness of that funding or award.”

The plan states: “The Federal government should not allow AI-related federal funding to be directed toward states with burdensome AI regulations that waste these funds, but should also not interfere with states’ rights to pass prudent laws that are not unduly restrictive to innovation.”

Legal questions

Travis Hall, director for state engagement at the Center for Democracy and Technology, said the strategy behind the executive order is likely based on a lack of presidential authority to preempt state laws.

“I don’t think that there is a dispute that Congress actually does have that authority. The President does not,” he said.

Hall believes the federal government is “probably unlikely to win” cases arguing that AI laws regulating how companies can operate in a single state violate the dormant commerce clause, a doctrine limiting states’ ability to regulate interstate commerce. He also questioned whether the federal government would have standing to bring such a case.

AI companies would have better standing, he said, but wouldn’t need an executive order to bring the suits.

“I do think that it’s telling that for all the concern that companies are expressing about these state laws, they have not brought those cases,” he said.

Hall said that even if lawsuits wouldn’t succeed, he believes the order is meant to “send a chill through state legislators, to have them back away from or be … hesitant about passing any AI regulations, particularly those that protect marginalized communities.”

Cody Venzke, a senior policy counsel at the ACLU, said the draft order discounts the idea that AI used in housing or education can have a discriminatory impact.

“That position has been arrived at despite the fact that research has, has demonstrated this over and over again, that this is something that occurs where AI will reflect existing historical biases in society and economic sectors. Instead, it gets discounted as ‘woke AI.’”

In addition to Colorado, a Texas law that will go into effect Jan. 1 will outlaw developing or deploying AI “with the intent to unlawfully discriminate against a protected class in violation of state or federal law.” It goes on to state that disparate impact is not sufficient to prove intent.

California’s discrimination regulations came through the state’s Civil Rights Council, which clarified the state’s existing anti-discrimination employment rules to cover AI, and to prohibit the use of systems that have an “adverse impact” for protected classes.

“But [in] many instances,” Venzke said, “states are still considering their policy approaches here, and the administration is attacking them, of course, without offering any sort of meaningful alternative.”

Drew Garner, director of policy engagement at the Benton Institute for Broadband & Society, a foundation focused on promoting inclusive broadband policy, said the nondeployment funds threatened by the order are intended to allow states to fund everything from digital skills to workforce development. The approximately $21 billion remaining in the BEAD program, potentially for those uses, is a “very appealing and large source of funding for states,” he said.

“… By conditioning these funds on an AI moratorium, the administration is essentially taking these nondeployment funds hostage,” he said.

He added that the law that established the BEAD program is clear on how states can use funds.

“I don’t see anything in that structure that allows [the National Telecommunications and Information Administration] to do this, or that allows the administration to do this,” he said.

----------

—Caroline Coudriet and John M. Donnelly contributed to this report.

©2025 CQ-Roll Call, Inc., All Rights Reserved. Visit cqrollcall.com. Distributed by Tribune Content Agency, LLC.
