Commentary: Sam Altman's terrible reason for letting ChatGPT talk to teens about suicide


Last month, the Senate Judiciary Subcommittee on Crime and Counterterrorism held a hearing on what many consider to be an unfolding mental health crisis among teens.

Two of the witnesses were parents of children who’d committed suicide in the last year, and both believed that AI chatbots played a significant role in abetting their children’s deaths. One couple now alleges in a lawsuit that ChatGPT told their son about specific methods for ending his life and even offered to help write a suicide note.

In the run-up to the September Senate hearing, OpenAI co-founder Sam Altman took to the company blog, offering his thoughts on how corporate principles are shaping its response to the crisis. The challenge, he wrote, is balancing OpenAI’s dual commitments to safety and freedom.

ChatGPT obviously shouldn’t be acting as a de facto therapist for teens exhibiting signs of suicidal ideation, Altman argues in the blog. But because the company values user freedom, the solution isn’t to insert forceful programming commands that might prevent the bot from talking about self-harm. Why? “If an adult user is asking for help writing a fictional story that depicts a suicide, the model should help with that request.” In the same post, Altman promises that age restrictions are coming, but similar efforts I’ve seen to keep young users off social media have proved woefully inadequate.

I’m sure it’s quite difficult to build a massive, open-access software platform that’s both safe for my three kids and useful for me. Nonetheless, I find Altman’s rationale here deeply troubling, in no small part because if your first impulse when writing a book about suicide is to ask ChatGPT about it, you probably shouldn’t be writing a book about suicide. More importantly, Altman’s lofty talk of “freedom” reads as empty moralizing designed to obscure an unfettered push for faster development and larger profits.

Of course, that’s not what Altman would say. In a recent interview with Tucker Carlson, Altman suggested that he’s thought this all through very carefully, and that the company’s deliberations on which questions its AI should be able to answer (and not answer) are informed by conversations with “like, hundreds of moral philosophers.”

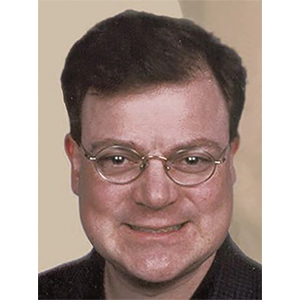

I contacted OpenAI to see if they could provide a list of those thinkers. They did not respond. So, as I teach moral philosophy at Boston University, I decided to take a look at Altman’s own words to see if I could get a feel for what he means when he talks about freedom.

The political philosopher Montesquieu once wrote that there is no word with so many definitions as freedom. With stakes this high, it is imperative that we seek out Altman’s own definition. The entrepreneur’s writings give us some important but perhaps unsettling hints. Last summer, in a much-discussed post titled “The Gentle Singularity,” Altman had this to say about the concept:

“Society is resilient, creative, and adapts quickly. If we can harness the collective will and wisdom of people, then although we’ll make plenty of mistakes and some things will go really wrong, we will learn and adapt quickly and be able to use this technology to get maximum upside and minimal downside. Giving users a lot of freedom, within broad bounds society has to decide on, seems very important. The sooner the world can start a conversation about what these broad bounds are and how we define collective alignment, the better.”

The OpenAI chief executive is painting with awfully broad brushstrokes here, and such massive generalizations about “society” tend to crumble quickly. More crucially, this is Altman, who purportedly cares so much about freedom, foisting the job of defining its boundaries onto the “collective wisdom.” And please, society, start that conversation fast, he says.

Clues from elsewhere in the public record give us a better sense of Altman’s true intentions. During the Carlson interview, for example, Altman links freedom with “customization.” (He does the same thing in a recent chat with the German businessman Matthias Döpfner.) This, for OpenAI, means the ability to create an experience specific to the user, complete with “the traits you want it to have, how you want it to talk to you, and any rules you want it to follow.” Not coincidentally, these features are mainly available with newer GPT models.

And yet, Altman is frustrated that users in countries with tighter AI restrictions can’t access these newer models quickly enough. In Senate testimony this summer, Altman referenced an “in joke” among his team regarding how OpenAI has “this great new thing not available in the EU and a handful of other countries because they have this long process before a model can go out.”

The “long process” Altman is talking about is just regulation — rules at least some experts believe “protect fundamental rights, ensure fairness and don’t undermine democracy.” But one thing that became increasingly clear as Altman’s testimony wore on is that he wants only minimal AI regulation in the U.S.:

“We need to give adult users a lot of freedom to use AI in the way that they want to use it and to trust them to be responsible with the tool,” Altman said. “I know there’s increasing pressure in other places around the world and some in the U.S. to not do that, but I think this is a tool and we need to make it a powerful and capable tool. We will of course put some guardrails in a very wide bounds, but I think we need to give a lot of freedom.”

There’s that word again. When you get down to brass tacks, Altman’s definition of freedom isn’t some high-flown philosophical notion. It’s just deregulation. That’s the ideal Altman is balancing against the mental health and physical safety of our children. That’s why he resists setting limits on what his bots can and can’t say. And that’s why regulators should step in and stop him. Because Altman’s freedom isn’t worth risking our kids’ lives for.

____

Joshua Pederson is a professor of humanities at Boston University and the author of “Sin Sick: Moral Injury in War and Literature.”

_____

©2025 Los Angeles Times. Visit at latimes.com. Distributed by Tribune Content Agency, LLC.
