Commentary: Wall Street is too complex to be left to humans

Published in Op Eds

A paper from researchers at hedge fund AQR Capital Management and Yale University addresses one of the most important questions in finance: Will artificial intelligence and machine learning replace human researchers and traders?

In 44 pages of densely written theory and empirical results under the title “The Virtue of Complexity in Return Prediction,” Bryan Kelly, Semyon Malamud and Kangying Zhou claim that more complex models, far too complex for humans to process, outperform simpler models. As Bloomberg News reports, the backlash was swift, with at least six papers challenging the findings, which Kelly has subsequently defended.

How will this shake out? My money is on Kelly and his co-researchers. The theoretical arguments are dauntingly technical, but the basic question is ancient and easy to understand.

One approach to prediction is to look for a few key indicators with clear causal links to the thing you want to predict and combine them in simple ways. To predict next month’s stock market return, for example, you might look at this month’s return, interest rates, price-earnings ratios and similar variables. Everything else is treated as random noise to be ignored. The problem with using too many indicators, or combining them in overly complex ways, is “overfitting.” You get a model that explains the past perfectly, and the future not at all. You’ve built a model that exploited noise in the past to explain everything, but those noise relations will not persist in the future.

If your interest is understanding or explaining things, the simple approach above is clearly the way to go. But if you’re only interested in prediction, there’s another way: Throw every imaginable indicator into the model (the technical term is “kitchen sink model”) and try every complex combination. If stocks with “V” in their ticker symbol tend to go up on rainy Tuesdays, that’s in your model. The idea is even if an indicator has no predictive value, it does not hurt your predictions; it just adds noise. You can put everything in and then shrink the noise afterward or do lots of trading so the noise is diversified away.
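The claim that lots of trading diversifies the noise away can be illustrated with a toy simulation. This is only a sketch under made-up assumptions: each trade earns a small fixed edge of 0.01 plus independent random noise with standard deviation 1.0, and the function names are hypothetical. It shows nothing about real markets, only that the average result of many independent noisy trades scatters far less than the average of a few.

```python
import random
import statistics


def average_return(n_trades, rng, edge=0.01, noise=1.0):
    """Average result of n_trades independent trades (edge and noise are assumed values)."""
    return statistics.fmean([edge + rng.gauss(0.0, noise) for _ in range(n_trades)])


def spread_of_average(n_trades, n_repeats=200, seed=0):
    """Standard deviation of the average return across repeated simulated runs."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    averages = [average_return(n_trades, rng) for _ in range(n_repeats)]
    return statistics.stdev(averages)


few = spread_of_average(10)      # a handful of trades: noise swamps the edge
many = spread_of_average(1000)   # many trades: noise largely averages out

print(f"spread of average over 10 trades:   {few:.3f}")
print(f"spread of average over 1000 trades: {many:.3f}")
```

With independent noise, the spread shrinks roughly with the square root of the number of trades, so 100 times as many trades should cut it by about a factor of 10. If the noise in different trades were correlated, the benefit would be smaller.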

The debate sparked by the paper is more nuanced than that illustration. Kelly and the others are not throwing every possible indicator into their model, but merely 15 variables with 12 monthly values each (180 total), from which they fit 12,000 parameters to predict next month’s stock market return. They’re not using letters in ticker symbols or the weather on Tuesdays. Their opponents are not arguing for only the simplest models; they just deny that complexity is always a virtue.

A very similar debate took place half a century ago in the context of roulette. In the early 1960s, Ed Thorp, the mathematics professor who came up with blackjack card counting, and Claude Shannon, the father of information theory, built the world’s first wearable computer to predict roulette spins. Prior systems for beating roulette relied on tabulating past results to find numbers that came up more often than others. Many people argued that roulette wheels were too well machined to get a useful advantage from that.

Thorp’s key insight was that if roulette wheels were built precisely enough that each number came up with the same frequency, they had to be predictable. His initial work showed that a roulette spin had two phases. When the ball was rotating against the outer rim of the bowl — the ball track — and the wheelhead (the moving part with all the numbers) was rotating in the opposite direction, the system was governed by simple Newtonian physics.

If you knew the speed of the ball and wheelhead and the coefficients of friction, it was simple to predict what number would be under the ball when it left the track and dropped down to the wheelhead. Once the ball exited the track, deflectors, spin and bounces made its motion chaotic and hard to predict. Nevertheless, just knowing what number was under the ball when it left the track allowed you to identify a third of the wheel in which the ball would land 40% of the time, more than enough for profitable betting.
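To see why a 40% hit rate on a third of the wheel is more than enough, here is a back-of-the-envelope calculation. It is a sketch under assumptions that are not in the account above: an American wheel with 38 pockets, a "third" of the wheel taken as 12 pockets, one unit bet straight-up on each covered pocket, and the standard 35-to-1 payout. The function name is hypothetical.

```python
from fractions import Fraction


def sector_bet_edge(pockets_covered, hit_probability, payout_to_one=35):
    """Expected profit per unit staked when betting one unit on each covered pocket."""
    stake = pockets_covered
    # On a hit, one bet wins payout_to_one units and the other covered bets lose.
    win_profit = payout_to_one - (pockets_covered - 1)
    # On a miss, every covered bet loses.
    lose_profit = -pockets_covered
    expected = hit_probability * win_profit + (1 - hit_probability) * lose_profit
    return expected / stake


# Assumed: 12 pockets covered, ball lands among them 40% of the time.
predicted = sector_bet_edge(12, Fraction(2, 5))
# Same bet with no prediction: 12 of 38 pockets hit purely by chance.
unaided = sector_bet_edge(12, Fraction(12, 38))

print(f"edge with prediction:    {float(predicted):.1%}")  # 20.0%
print(f"edge without prediction: {float(unaided):.1%}")    # -5.3%
```

Under these assumptions the bettor goes from losing about 5 cents per dollar staked to winning 20 cents. A European wheel with 37 pockets, or a differently sized sector, would change the numbers somewhat but not the conclusion.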

This led to one of the core principles for quant investors: Opportunity consists of finding the predictability of things other people treat as random, and the uncertainty in things other people treat as deterministic. By the 1970s, building a wearable roulette computer and proving that it worked was one of the rites of passage for quants. (1) Improvements in technology led to vast improvements in accuracy and reliability.

By the time I tried my hand at this in the mid-1970s, the field had split. One group, the physicists, put their energy into improved measuring devices. They used complex equations to process the relevant data using causal models derived from physics. I was inclined toward the other group, the statisticians. We used primitive versions of machine learning algorithms to exploit patterns. We wanted to take advantage not just of deterministic factors assuming a perfect roulette wheel, but also of patterns from imperfections like some number slots being a little softer or harder than others, or the wheel being not quite horizontal. We measured many more factors than the physicists but with less accuracy on each, and we crunched lots of data that might have seemed irrelevant.

The two groups had arguments quite similar to the current one on the virtue of complexity. The physicists’ big advantage was devices that required little or no training for individual wheels, since they relied on universal physical law rather than imperfections of individual wheels. Our advantages were low cost and higher prediction accuracy — especially in the sloppiest casinos with cheaper wheels and lax maintenance — at the cost of needing hours of calibration before betting became profitable. (2)

I’ve been betting on complexity over theory for 50 years, and prediction over understanding. I’ve long felt that machine learning and artificial intelligence will replace human analysts and traders (as well as human drivers, doctors, lawyers and scientists among many others). Winning machine learning and AI algorithms will find their own patterns from as much data as possible, rather than being guided by humans to select relevant data and impose a priori theoretical constraints on answers. But I’m often wrong, so don’t put all your money on the number my roulette computer likes best.

____

(1) By the way, these devices were arguably legal at the time. Casinos generally had rules against devices like magnets that affected results, but not against devices that merely predicted them. In 1985 Nevada outlawed prediction devices. Other jurisdictions, such as the UK, leave it up to individual casinos but there are still plenty with no rules against them.

(2) Well-run casinos switch wheelheads and bowls around every night so we couldn’t rely on yesterday’s calibration for today’s betting.

____

This column reflects the personal views of the author and does not necessarily reflect the opinion of the editorial board or Bloomberg LP and its owners.

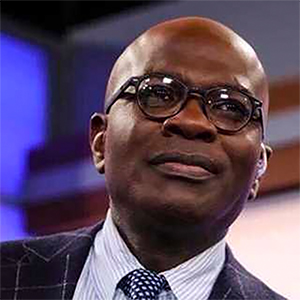

Aaron Brown is a former head of financial market research at AQR Capital Management. He is also an active crypto investor, and has venture capital investments and advisory ties with crypto firms.

©2025 Bloomberg L.P. Visit bloomberg.com/opinion. Distributed by Tribune Content Agency, LLC.
